deathshadow

Veteran Member

- Joined

- Jan 4, 2011

- Messages

- 1,378

Just thought I'd share what I've been working on.

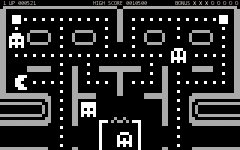

For a while now I've been working on speeding up my 160x100 game engine -- the first release that's going to make use of it is my upcoming "it's done when it's done" Paku Paku 2.0 -- pretty much a total rewrite of the game from scratch.

I learned a lot writing version 1.x, as well as with my coded sprites demo that came after -- writing a version for the C64 also made me "rethink my ink" and change out a lot of methodology... but more than anything, working with an actual unexpanded PCJr has made me realize I need to squeeze even more out of the engine.

The first step was switching from TP's inline assembler to using NASM. Turbo Pascal adds some overhead to the inline assembler that we don't always need and makes it's own changes to what you code as it thinks appropriate -- using a "real" assembler let's me micro-manage what's really being done. (like do I really need to dick with BP and SP when I'm not passing parameters?) Laughably that alone seems to shave anywhere from 16 to 128 bytes off EVERY function... Which surprised me a lot.

One of the big things I've found in testing is that reading Jr memory is slower than writing -- noticeably so. This means that a RLL type compression of data can pay off bigtime.

Which also works with my other goal for Paku 2.0 and the three other games I'm working on... that being getting the memory footprint below 64k. I figure if I can have a 40k memory footprint on a C64 version, there's no excuse for the current 93k version 1.6 uses.

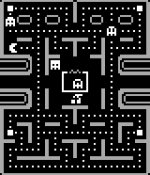

The existing engine for Paku Paku renders 4x3 tile sprites to the screen from a 28x31 list of tiles. A copy of that same 28x31 tile list is used for the gameplay map to determine if pellets have been eaten or not and where walls are. This means that at start of the game, AND when I'm trying to render the 'greyscale' flash at the end of the game, it has to go through all 868 tile locations to render the appropriate 4x3 tile (actually 3x3 with a shift). Also means each tile has TWO copies since the data is 3 pixels wide, and shifting by 4 in realtime is rubbish.

The first major change I've made is to create a separate copy of the playfield as it appears in the backbuffer from the tile sheet. This means I can blit the bitmap to the backbuffer or screen faster -- the latter being ideal for the "blink" betweeen blue and greyscale at the end of each level. The intent being that I can encode a 'master copy' of the play layout and the bitmap version, and simplify the playfield table.

My first attempt at encoding was a bit overboard, attempting LZW, LZH and LZ4 -- and in all cases finding the decoder to be bigger than the reduction in file size. Worse the implementation for most such progressive encoders involves going back and copying from what's already been written; completely negating any possible "speed" you could gain from the reduction in size. -- So much for those.

Next I tried a simple two-byte RLL... First byte being 'how many', second byte being 'of what'. The ability to "lodsw; mov cl, ah; rep stosb;" works well, but the increase in reads and number of unique values meant that speed wise and size-wise I figured I could do better.

So I went in and started playing with multi-byte RLL. In multi-byte you use a bit or two as a trigger to say "this is a repeat" vs. "this is just data" -- the problem was that the data in question is mostly using all the bits too much for that to really offer any savings... unless I did a lookup table.

AHA. If I use a lookup table I can have TWO tables, one for the blue version, one for the greyscale... Which started me looking at the data itself.

Really the bitmap for screen/buffer only has four pixel values if I don't render the pellets... if I make the pellets separate (another EUREKA! moment) that only has TWO values and results in longer runs of empty pixels

So basically for pixel data we have:

L = high intensity border (light blue or white),

H = low intensity border (dark blue or dark grey),

M = middle intensity border (exit for ghost jail, magenta or grey)

0 = empty

Since that's two pixels per byte, the possible combinations become:

LL, HH, 00

0L, L0, 0H, H0,

LH, HL,

LM, MM, ML

Looking deeper, I realized that only three states actually need 'run lengths' -- LL, HH and 00 -- 90%+ of the data lines up on those. If I use the top two bits as the triggers I can use the bottom 6 bits for how many to repeat or the desired value. The LM, MM and ML always appear in the order LM MM MM ML, so I could reduce that four byte run to a single byte. The backbuffer is also a different width from the screen and I want the ability to decode to both -- solution there? Add a 'end of line' character, and if we're gonna have EOL, might as well put in "end of data" so we don't have to run a counter. Also for the repeat of 0, I choose to skip writing to the screen and just increment the buffer offset, speeding it up even more since for setting up gameplay we can just do a buffer clear first, and for writing the 'blink' we don't want to modify the 'blank' parts anyways.

; Binary

; x = count

; . = don't care

01xx xxxx == repeat 00

10xx xxxx == repeat LL

11xx xxxx == repeat HH

00.. .000 == 0L

00.. .001 == L0

00.. .010 == 0H

00.. .011 == H0

00.. .100 == LH

00.. .101 == HL

00.. .110 == LM MM MM ML

00.. .111 == EOL

else == EOD

This encoding is pretty efficient for the amount of compression... but checking the top two bits with multiple nested TEST and CMP wasn't exactly great from a decoder speed point of view... having to "AND" off the extra bits certainly wasn't helping -- if only there was a way to test bits AND do the compare in one operation without corrupting the count...

I sat there thinking about it, how can I extract the state more efficiently -- then it hit me; flip it around. Move the state to the bottom bit... then I can do a shift, and jump on carry. The drop through on the conditional jump being the most likely of values in order. A few extra cycles for HH or the single bytes doesn't matter if the long runs of 'nothing' and LL can have less overhead.

xxxx xxx0 == repeat 00

xxxx xx01 == repeat LL

xxxx x011 == repeat HH

0000 0111 == 0L

0001 0111 == L0

0010 0111 == 0H

0011 0111 == H0

0100 0111 == LH

0101 0111 == HL

...0 1111 == EOL

..01 1111 == LM MM MM ML

..11 1111 == EOD

So for example: (NASM code)

With this as the decoder:

ROCK AND ROLL. Delivers around 3.5:1 compression, specific to this data -- AND it blits fast enough to be acceptable on the Jr.

Once encrypted, it became apparant with the EOL's in place how often the map repeats itself. With the above decoder actually working out faster than I need, I started thinking on how to reduce the redundancies in the data... finally I just said "fine, we'll use a lookup table for each line"

The line data being something like this:

... and so forth.

I make the last value in mapLineList 0 as a easy out.

Changing the above decoder to handle this was simple.

Didn't impact performance significantly, but reduced the data size another ~40%... net result is basically taking 3,822 bytes of data, and reducing it to 632 bytes. UAH... though I'm still arguing with myself over push/pop on SI and using DX to store HHLL for the repeat blits. I'm not sure those handful of [bx + #] calculations on those two runs is worth the effort.

Converting it to write to screen being as simple as adding a "inc di" after each stosb, unrolling the REP into a LOOP of same, and increasing the EOL addition to 76 (since the screen is 160 bytes wide).

I also went a little further and added more 'unique' multi-byte runs to the xxxx1111 list... specifically there are a LOT of cases where L00L or 0LL0 show up and reducing those to a single byte shaved a few more bytes off it.

I did try adding another shift to allow for stosw instead of stosb, but the runs aren't long enough for that extra code and overhead to actually pay off where it's needed. A lot of optimizations I'd make on larger data sets seem to be working out like that.

Using similar methods to draw the pellets and build the map used by the actual game engine for collisions has reduced the memory footprint of the game around 8k and made it run far, far faster.

For example the pellet encoding is even simpler:

xxxx xxx0 == skip x

xxxx xx01 == repeat 0x70 0x07 (0x0770) skip 1

.... .011 == 0x07 skip 1

.... 0111 == 0x70

I render a normal pellet at the super-pellet locations, since those have their buffer offset and screen offsets hardcoded due to their blink animations. Storing 0x0770 in DX speeds this up a bit (so I'm not using immediates inside the loop), though really there's no reason to speed up how fast the pellets are drawn since they're only done at the start of each level.

The playmap encoding is similar as well.

xxxx xxx0 == wall x

xxxx xx01 == pellets x

xxxx x011 == empty x

.... 0111 == super pellet

.... 1111 == ghost wall (2 bytes)

Even simpler. The values written:

empty 0x00

pellets 0x01

super pellet 0x03

wall 0x80

ghost wall 0xC0

Lets me quickly test for walls by checking the sign flag, or pellets with bit 0 using a simple "and al, 0x83" followed by jz "empty" and js "wall", the drop-through being "eat a pellet" which then also drops-through to "empty" to allow navigation.

Much like with the pellets the live gameplay map only needs to be written at the start of each level, so optimization efforts went more towards code and data size than they did speed... even though laughably this is far faster than what paku 1.x is doing.

Though with the unexpanded 128k Jr. as my current target minimum, smaller code size most of the time does mean faster, even when it's slower when run on a real PC. I'm hoping these changes will be enough to let the unmodified low-ram Junior run the game as good as a real PC from the same codebase. If it doesn't reach that goal I'm going to probably have to make a Junior specific version using Trixter's Jr. specific mode, since the linear screen buffer would reduce the overhead of blitting a LOT... and I could use page flipping instead of a manual backbuffer. HOPING I won't have to do that though as I'd like 2.0 to be self contained.

I'm also playing with my joystick read routine to make it a bit better on the Jr. Turns out the interrupt problem isn't entirely what's wrong with how I was doing it, it was just alleviating problems in the 'dead zone' common when you have a low return value as 'center'. Pretty much if the center is less than 10, I need to make sure the dead zone is only 3 wide.

Stickmask is determined during startup as to which 4 axis to test. Doing an "and' of it's value against what's read from the port masks off ports that aren't connected. Side effect of the detection code I use for this is that when machines are 'too fast' for this stick reader the joystick is disabled. (that's actually a good thing!)

In any case, just thought I'd share what I've been up to and get it down somewhere. Sometimes just writing it down helps with debugging and thinking on new ways of handling things.

Also, never hurts to have another set of eyes on things. Any suggestions are more than welcome.

For a while now I've been working on speeding up my 160x100 game engine -- the first release that's going to make use of it is my upcoming "it's done when it's done" Paku Paku 2.0 -- pretty much a total rewrite of the game from scratch.

I learned a lot writing version 1.x, as well as with my coded sprites demo that came after -- writing a version for the C64 also made me "rethink my ink" and change out a lot of methodology... but more than anything, working with an actual unexpanded PCJr has made me realize I need to squeeze even more out of the engine.

The first step was switching from TP's inline assembler to using NASM. Turbo Pascal adds some overhead to the inline assembler that we don't always need and makes it's own changes to what you code as it thinks appropriate -- using a "real" assembler let's me micro-manage what's really being done. (like do I really need to dick with BP and SP when I'm not passing parameters?) Laughably that alone seems to shave anywhere from 16 to 128 bytes off EVERY function... Which surprised me a lot.

One of the big things I've found in testing is that reading Jr memory is slower than writing -- noticeably so. This means that a RLL type compression of data can pay off bigtime.

Which also works with my other goal for Paku 2.0 and the three other games I'm working on... that being getting the memory footprint below 64k. I figure if I can have a 40k memory footprint on a C64 version, there's no excuse for the current 93k version 1.6 uses.

The existing engine for Paku Paku renders 4x3 tile sprites to the screen from a 28x31 list of tiles. A copy of that same 28x31 tile list is used for the gameplay map to determine if pellets have been eaten or not and where walls are. This means that at start of the game, AND when I'm trying to render the 'greyscale' flash at the end of the game, it has to go through all 868 tile locations to render the appropriate 4x3 tile (actually 3x3 with a shift). Also means each tile has TWO copies since the data is 3 pixels wide, and shifting by 4 in realtime is rubbish.

The first major change I've made is to create a separate copy of the playfield as it appears in the backbuffer from the tile sheet. This means I can blit the bitmap to the backbuffer or screen faster -- the latter being ideal for the "blink" betweeen blue and greyscale at the end of each level. The intent being that I can encode a 'master copy' of the play layout and the bitmap version, and simplify the playfield table.

My first attempt at encoding was a bit overboard, attempting LZW, LZH and LZ4 -- and in all cases finding the decoder to be bigger than the reduction in file size. Worse the implementation for most such progressive encoders involves going back and copying from what's already been written; completely negating any possible "speed" you could gain from the reduction in size. -- So much for those.

Next I tried a simple two-byte RLL... First byte being 'how many', second byte being 'of what'. The ability to "lodsw; mov cl, ah; rep stosb;" works well, but the increase in reads and number of unique values meant that speed wise and size-wise I figured I could do better.

So I went in and started playing with multi-byte RLL. In multi-byte you use a bit or two as a trigger to say "this is a repeat" vs. "this is just data" -- the problem was that the data in question is mostly using all the bits too much for that to really offer any savings... unless I did a lookup table.

AHA. If I use a lookup table I can have TWO tables, one for the blue version, one for the greyscale... Which started me looking at the data itself.

Really the bitmap for screen/buffer only has four pixel values if I don't render the pellets... if I make the pellets separate (another EUREKA! moment) that only has TWO values and results in longer runs of empty pixels

So basically for pixel data we have:

L = high intensity border (light blue or white),

H = low intensity border (dark blue or dark grey),

M = middle intensity border (exit for ghost jail, magenta or grey)

0 = empty

Since that's two pixels per byte, the possible combinations become:

LL, HH, 00

0L, L0, 0H, H0,

LH, HL,

LM, MM, ML

Looking deeper, I realized that only three states actually need 'run lengths' -- LL, HH and 00 -- 90%+ of the data lines up on those. If I use the top two bits as the triggers I can use the bottom 6 bits for how many to repeat or the desired value. The LM, MM and ML always appear in the order LM MM MM ML, so I could reduce that four byte run to a single byte. The backbuffer is also a different width from the screen and I want the ability to decode to both -- solution there? Add a 'end of line' character, and if we're gonna have EOL, might as well put in "end of data" so we don't have to run a counter. Also for the repeat of 0, I choose to skip writing to the screen and just increment the buffer offset, speeding it up even more since for setting up gameplay we can just do a buffer clear first, and for writing the 'blink' we don't want to modify the 'blank' parts anyways.

; Binary

; x = count

; . = don't care

01xx xxxx == repeat 00

10xx xxxx == repeat LL

11xx xxxx == repeat HH

00.. .000 == 0L

00.. .001 == L0

00.. .010 == 0H

00.. .011 == H0

00.. .100 == LH

00.. .101 == HL

00.. .110 == LM MM MM ML

00.. .111 == EOL

else == EOD

This encoding is pretty efficient for the amount of compression... but checking the top two bits with multiple nested TEST and CMP wasn't exactly great from a decoder speed point of view... having to "AND" off the extra bits certainly wasn't helping -- if only there was a way to test bits AND do the compare in one operation without corrupting the count...

I sat there thinking about it, how can I extract the state more efficiently -- then it hit me; flip it around. Move the state to the bottom bit... then I can do a shift, and jump on carry. The drop through on the conditional jump being the most likely of values in order. A few extra cycles for HH or the single bytes doesn't matter if the long runs of 'nothing' and LL can have less overhead.

xxxx xxx0 == repeat 00

xxxx xx01 == repeat LL

xxxx x011 == repeat HH

0000 0111 == 0L

0001 0111 == L0

0010 0111 == 0H

0011 0111 == H0

0100 0111 == LH

0101 0111 == HL

...0 1111 == EOL

..01 1111 == LM MM MM ML

..11 1111 == EOD

So for example: (NASM code)

Code:

normalMap:

db 0x01, 0x10, 0x09, 0x90, 0x19, 0x91, 0x11, 0x99, 0x15, 0x55, 0x51

whiteMap:

db 0x08, 0x80, 0x0F, 0xF0, 0x8F, 0xF8, 0x88, 0xFF, 0x87, 0x77, 0x78With this as the decoder:

Code:

; ASSUMES:

; si points to encoded map

; di points to backBuffer

; bx points to normalMap or whiteMap

xor ax, ax

xor cx, cx

.nextByte:

lodsb

shr al, 1

jc .xxxx_xx?1

; xxxx_xxx0 skip

add di, ax

jmp .nextByte

.xxxx_xx?1:

shr al, 1

jc .xxxx_x?11

; xxxx_xx01 repeat LL

mov cl, al

mov al, [bx + 6]

rep stosb

jmp .nextByte

.xxxx_x?11:

shr al, 1

jc .xxxx_?111

; xxxx_x011 repeat HH

mov cl, al

mov al, [bx + 7]

rep stosb

jmp .nextByte

.xxxx_?111:

shr al, 1

jc xxx?_1111

; xxxx_0111 table lookup one byte

xlat

stosb

jmp .nextByte

.xxx?_1111:

shr al, 1

jc .xx?1_1111

; xxx0_1111 EOL

add di, 6 ; data is 42 bytes wide, backBuffer is 48

jmp .nextByte

.xx?1_1111:

shr al, 1

jc .xx11_1111

; xx01_1111 LM MM ML

mov al, [bx + 8]

stosb

mov al, [bx + 9]

stosb

stosb

mov al, [bx + 10]

stosb

jmp .nextByte

.xx11_1111: ; EOD

retFROCK AND ROLL. Delivers around 3.5:1 compression, specific to this data -- AND it blits fast enough to be acceptable on the Jr.

Once encrypted, it became apparant with the EOL's in place how often the map repeats itself. With the above decoder actually working out faster than I need, I started thinking on how to reduce the redundancies in the data... finally I just said "fine, we'll use a lookup table for each line"

Code:

segment CONST

mapLineList:

dw mapLineData00, mapLineData01, mapLineData02, mapLineData02

dw mapLineData02, mapLineData02, mapLineData02, mapLineData03

dw mapLineData04, mapLineData04, mapLineData04, mapLineData04

; etc, etc, etc for 93 lines total

dw 0 ; end of scanlinesThe line data being something like this:

Code:

mapLineData00:

db 0x02, 0xFB, 0x4B, 0x02, 0x0F

mapLineData01:

db 0x27, 0x4D, 0x04, 0x4D, 0x37, 0x0F

mapLineData02:

db 0x57, 0x26, 0x1F, 0x26, 0x47, 0x0F

mapLineData03:

db 0x57, 0x06, 0x11, 0x06, 0x07, 0x15, 0x06, 0x1F

db 0x06, 0x15, 0x17, 0x06, 0x11, 0x06, 0x47, 0x0F

mapLineData04:

db 0x57, 0x04, 0x07, 0x08, 0x17, 0x04, 0x17, 0x0A

db 0x17, 0x04, 0x1F, 0x04, 0x07, 0x0A, 0x07, 0x04

db 0x07, 0x08, 0x17, 0x04, 0x47, 0x0FI make the last value in mapLineList 0 as a easy out.

Changing the above decoder to handle this was simple.

Code:

; ASSUMES:

; ds:si points to mapLineList

; es:di points to backBuffer

; bx points to normalMap or whiteMap

xor cx, cx

.nextLine:

lodsw

or ax, ax

jz .done

mov dx, si ; since we don't "need" dx, use it instead of a push

mov si, ax

xor ax, ax

.nextByte:

lodsb

shr al, 1

jc .xxxx_xx?1

; xxxx_xxx0 skip

add di, ax

jmp .nextByte

.xxxx_xx?1:

shr al, 1

jc .xxxx_x?11

; xxxx_xx01 repeat LL

mov cl, al

mov al, [bx + 6]

rep stosb

jmp .nextByte

.xxxx_x?11:

shr al, 1

jc .xxxx_?111

; xxxx_x011 repeat HH

mov cl, al

mov al, [bx + 7]

rep stosb

jmp .nextByte

.xxxx_?111:

shr al, 1

jc xxx?_1111

; xxxx_0111 table lookup one byte

xlat

stosb

jmp .nextByte

.xxx?_1111:

shr al, 1

jc .xxx1_1111

; xxx0_1111 EOL

add di, 6 ; data is 42 bytes wide, backBuffer is 48

mov si, dx

jmp .nextLine

.xxx1_1111: ; LM MM MM ML

mov al, [bx + 8]

stosb

mov al, [bx + 9]

stosb

stosb

mov al, [bx + 10]

stosb

jmp .nextByte

.done:

retFDidn't impact performance significantly, but reduced the data size another ~40%... net result is basically taking 3,822 bytes of data, and reducing it to 632 bytes. UAH... though I'm still arguing with myself over push/pop on SI and using DX to store HHLL for the repeat blits. I'm not sure those handful of [bx + #] calculations on those two runs is worth the effort.

Converting it to write to screen being as simple as adding a "inc di" after each stosb, unrolling the REP into a LOOP of same, and increasing the EOL addition to 76 (since the screen is 160 bytes wide).

I also went a little further and added more 'unique' multi-byte runs to the xxxx1111 list... specifically there are a LOT of cases where L00L or 0LL0 show up and reducing those to a single byte shaved a few more bytes off it.

I did try adding another shift to allow for stosw instead of stosb, but the runs aren't long enough for that extra code and overhead to actually pay off where it's needed. A lot of optimizations I'd make on larger data sets seem to be working out like that.

Using similar methods to draw the pellets and build the map used by the actual game engine for collisions has reduced the memory footprint of the game around 8k and made it run far, far faster.

For example the pellet encoding is even simpler:

xxxx xxx0 == skip x

xxxx xx01 == repeat 0x70 0x07 (0x0770) skip 1

.... .011 == 0x07 skip 1

.... 0111 == 0x70

I render a normal pellet at the super-pellet locations, since those have their buffer offset and screen offsets hardcoded due to their blink animations. Storing 0x0770 in DX speeds this up a bit (so I'm not using immediates inside the loop), though really there's no reason to speed up how fast the pellets are drawn since they're only done at the start of each level.

The playmap encoding is similar as well.

xxxx xxx0 == wall x

xxxx xx01 == pellets x

xxxx x011 == empty x

.... 0111 == super pellet

.... 1111 == ghost wall (2 bytes)

Even simpler. The values written:

empty 0x00

pellets 0x01

super pellet 0x03

wall 0x80

ghost wall 0xC0

Lets me quickly test for walls by checking the sign flag, or pellets with bit 0 using a simple "and al, 0x83" followed by jz "empty" and js "wall", the drop-through being "eat a pellet" which then also drops-through to "empty" to allow navigation.

Much like with the pellets the live gameplay map only needs to be written at the start of each level, so optimization efforts went more towards code and data size than they did speed... even though laughably this is far faster than what paku 1.x is doing.

Though with the unexpanded 128k Jr. as my current target minimum, smaller code size most of the time does mean faster, even when it's slower when run on a real PC. I'm hoping these changes will be enough to let the unmodified low-ram Junior run the game as good as a real PC from the same codebase. If it doesn't reach that goal I'm going to probably have to make a Junior specific version using Trixter's Jr. specific mode, since the linear screen buffer would reduce the overhead of blitting a LOT... and I could use page flipping instead of a manual backbuffer. HOPING I won't have to do that though as I'd like 2.0 to be self contained.

I'm also playing with my joystick read routine to make it a bit better on the Jr. Turns out the interrupt problem isn't entirely what's wrong with how I was doing it, it was just alleviating problems in the 'dead zone' common when you have a low return value as 'center'. Pretty much if the center is less than 10, I need to make sure the dead zone is only 3 wide.

Code:

; procedure stickUpdate;

pProcedure stickUpdate

mov dx, 0x201

mov cx, stickLimit

xor ax, ax

mov bx, ax

mov di, ax

mov si, ax

mov ah, [stickMask]

push bp

mov bp, bx

cli

; using SP for zero inside the loop increases speed ~20 clocks per loop

mov es, sp ; since we can't use the stack, we put sp into es

mov sp, bx

out dx, al

.loop:

in al, dx

and al, ah

ror al, 1

adc bx, sp

ror al, 1

adc di, sp

ror al, 1

adc si, sp

ror al, 1

adc bp, sp

or al, al

loopnz .loop

mov sp, es

sti

mov [stick0x], bx

mov [stick0y], di

mov [stick1x], si

mov [stick1y], bp

pop bp

retfStickmask is determined during startup as to which 4 axis to test. Doing an "and' of it's value against what's read from the port masks off ports that aren't connected. Side effect of the detection code I use for this is that when machines are 'too fast' for this stick reader the joystick is disabled. (that's actually a good thing!)

In any case, just thought I'd share what I've been up to and get it down somewhere. Sometimes just writing it down helps with debugging and thinking on new ways of handling things.

Also, never hurts to have another set of eyes on things. Any suggestions are more than welcome.

Last edited: