voidstar78

Veteran Member

I recently came across an old poster I had that was in an old issue of CrossTalk magazine (about 20 years ago), which was a summary of "all" programming languages since the 1950s.

It looks like CrossTalk faded away around 2017, but during its run they had some thick articles about software development tools and processes, and the management thereof, particularly as related to the defense industry.

What Happened To CrossTalk, The Journal Of Defense Software Engineering? (theqalead.com)

For anyone interested, I've scanned the poster to make a digital version. I'll host a large and small resolution version of it on github:

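GitHub - voidstar78/EvolutionOfProgrammingLanguagesPoster: A poster overviewing the evolution of programming languages since 1950. (github.com)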

This was a large wall poster, so the creases from folding have faded some entries in the scan.

It establishes FORTRAN as the "first" high level language (I'm curious why they did "proper case" on "Fortran" but used upper-case on COBOL -- recall, FORTRAN is Formula Translation).

APL has no branches. One "tragedy of history" (in my opinion) is that the ASCII standard didn't include APL symbols. APL was formulated in 1960, and ASCII "somewhat ratified" by 1963 - APL was still new, and so too was the ASCII standard itself. I can't recall which institutions were involved in those two efforts - but if some collaboration had ended up making APL symbols a part of that standard (or at least part of the extended ASCII), perhaps APL would have seen greater adoption.

I see "B" on the chart, but not "D". I never really kept up with either of those.

As I finished college in the late 1990s, I recall going to the Computer Science shelves at a Borders bookstore and realizing how much the industry and software-development field had grown. Beyond the "classic" high level programming languages, now there was an explosion of "web programming" technology to help "glue" things together - along with hearing about Microsoft's NGWS stuff (precursor to what became .NET). The term "full stack developer" hadn't quite yet become mainstream, and (at the time) we considered "web programmers" as "script kiddies" (i.e. not real programmers) -- that was mostly directed at HTML and CGI.

One thing lacking in this poster is any reference to assembly language. Kathleen Booth passed away late last year, but she (in collaboration with her husband) is credited with developing the first concept of an assembler - some of that work started around 1947, and the concept was fairly established and practiced certainly by 1949. Prior to this, recall that one "programmed" a computer by re-wiring it.

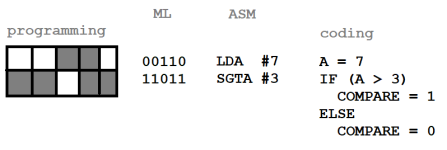

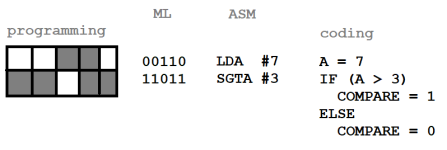

Consider:

One could do "programming" using literal flags, as were historically used in coordinating ship navigation and band marches -- in other words, humankind has been "programming" systems for centuries (that is, defining a set of movements or actions in response to an indicator/instruction). In digital computing, programming can be expressed using wires plugged into a system. That wiring indicates sequences that get translated to operations. Once we had electronic memory, that sequencing could be encoded into a binary sequence (machine language) in some media -- and I think that marked the beginning of "software" (no longer "hard-wired" but "soft"/malleable by adjusting memory-content).

It wasn't a far stretch to symbolically abbreviate that machine language ("assembly language"), which was especially helpful for indicating branching (but required a two-pass process when converting the assembly into machine language: one pass to record where each label lands, and a second to fill in the branch targets).

In the above, each "opcode" is just some hypothetical instruction - and in those early days, there was a lot of experimentation on defining instruction sets (basically the RISC vs CISC approaches - those terms came later, but it was a debate between a few simple general instructions and complicated but featureful instructions -- or some hybrid in between). In the above, SGTA is a hypothetical instruction that sets a COMPARE register after evaluating a given value against REGISTER A. Before microprocessors, those instructions were implemented "brute-force" with elaborate electrical components (hence lots of logic boards - with lots of heat, power consumption, and high failure rates).
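To make the two-pass idea concrete, here is a rough sketch in Python of a toy assembler. Everything in it is an assumption for illustration: the mnemonics other than SGTA, all of the opcode numbers, the fixed two-byte instruction size, and the `label:` / `;` comment syntax are made up and don't correspond to any real machine. Pass 1 only counts addresses and remembers where each label lands; pass 2 emits the bytes and swaps label names for those addresses, which is what lets a branch refer to a label that appears later in the listing.

```python
# Toy two-pass assembler. The instruction set is invented: opcode numbers are
# arbitrary, and every instruction is assumed to be 2 bytes (opcode + operand).
OPCODES = {"LDA": 0x01, "SGTA": 0x02, "BRT": 0x03,
           "ADD": 0x04, "JMP": 0x05, "HLT": 0xFF}

PROGRAM = """
start:
    LDA 5        ; load 5 into register A
    SGTA 3       ; evaluate 3 against register A, set COMPARE (hypothetical)
    BRT done     ; forward reference: 'done' has not been seen yet
    ADD 1
    JMP start    ; backward reference
done:
    HLT
"""

def assemble(source):
    # Pass 1: strip comments, record the address of every label,
    # and collect (mnemonic, operand) pairs without resolving anything.
    symbols = {}
    instructions = []
    address = 0
    for raw in source.splitlines():
        line = raw.split(";", 1)[0].strip()
        if not line:
            continue
        if line.endswith(":"):
            symbols[line[:-1]] = address
            continue
        parts = line.split()
        operand = parts[1] if len(parts) > 1 else "0"
        instructions.append((parts[0], operand))
        address += 2  # fixed-size instructions keep the address math trivial

    # Pass 2: every label is now known, so operands can be resolved.
    code = []
    for mnemonic, operand in instructions:
        value = symbols[operand] if operand in symbols else int(operand, 0)
        code += [OPCODES[mnemonic], value & 0xFF]
    return bytes(code)

print(assemble(PROGRAM).hex(" "))
# prints: 01 05 02 03 03 0a 04 01 05 00 ff 00
```

Note the `03 0a` pair: the BRT operand became address 10 (0x0a), where `done` ended up, even though that label comes after the branch in the source. A single pass would have had nothing to put there yet.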

Anyway, when I hear someone say "I'm a programmer" -- I assume that means they're familiar with assembly and machine-code :D That's not really the case anymore. I think these days, a better term is "coder" or "software developer." There are very few actual programmers left (well, except MCU and demoscene, or embedded/drivers development ofc).

And here is the smaller version for reference:
