Quite! Do you need one? Shipping would be high, but I do have several PC and XT compatibles that could use a home -- I also have some bare motherboards kicking around that would be reasonable to ship, and you could just use any regular AT power supply with them.

Thanks for the offer, but as you said, shipping from the US would be high. Way too high. (Yes, I'm cheap.)

I personally do not see the point of getting to the bottom of Norton SI's buggy code. If Norton SI is crashing because the disk controller is too fast, what's the solution? Make the BIOS slower? I feel alecv's quest is folly.

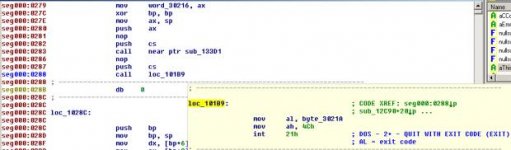

Well, it could still be Bochs' fault, I suppose, but yeah, it certainly looks like NU SI is to blame. Looking at the code in IDA, there's a lot of odd stuff like this, for example:

BTW, Happy Birthday Trixter!