

AI - What might people like us use it for?

- Thread starter westveld


DougM

Experienced Member

I was thinking about this last night after a frustrating time trying to find an old conversation. Was it in email? FB? Text? WhatsApp? If only I had a system that could cull through all my content and give me a natural language interface ("something about Model 100, last year maybe?" or "picture of my house").


But of course it has to run on the desktop; the information must be kept private.

It also has to stay current, updating with new information either by push or in a daily batch.

And there are LLMs that can be run on a desktop. I ran across this article this morning:

'Forget ChatGPT: Why Researchers Now Run Small AIs On Their Laptops' - Slashdot

Nature published an introduction to running an LLM locally, starting with the example of a bioinformatician who's using AI to generate readable summaries for his database of immune-system protein structures. "But he doesn't use ChatGPT, or any other web-based LLM." He just runs the AI on his...

slashdot.org

It doesn't feel like this is particularly difficult, just a matter of gathering capture APIs from the various platforms, copping someone's model, and uploading and processing the data. It would probably take me a year to get it together (photo content analysis might take longer), but maybe ClaudeAI can do it in an afternoon.

I've always used email as my historical data bank. It was a mighty blow when the company I worked for shut down and unceremoniously shut off the email system. All that content, both personal and professional, went away in an instant. Just the other day my old boss texted me asking for some detail, and I could have provided it had I still had access to that inbox.

stepleton

Veteran Member

I'd like to see conversational AI as an interactive tour guide for various old computer systems. You shovel in manuals, logged sessions (if applicable/available), historical information, and recollections+anecdotes, and then on the other side you get "someone" to show you around some kind of computing environment. Here's a kind of dialogue I'd love to see (ignore typos and name mix-ups as well as misremembered details):

AI: Welcome to the Workshop for the Apple Lisa. This was the primary environment for people who wanted to create programs for the Apple Lisa and, for a short while around the time of its introduction, the Macintosh as well. The interface you see is a text-only menu-driven interface where commands are selected with single keyboard keys. This was likely inspired by the UCSD p-System, a Pascal-based OS that had a very similar interface in function and appearance.

User: How do I see files stored on the disk?

AI: You'll need to start a program called the File Manager first. Type f. Then, once it starts, type l to use the List command to list all of the files. You'll notice it's a bit slow; the Names command speeds things up if you only need to see file names.

User: Got it. It looks like List shows all the files in all of the subdirectories recursively. Is there a way to change the current working directory?

AI: It's a bit confusing! You're probably seeing files with the / character in their names, but that's actually part of the filename: those files are sitting in the root directory with all the others. The Workshop actually didn't have directories for most of the time it was in use, because the underlying Lisa OS only had a flat filesystem. Apple actually advised Workshop users to simulate directory structure by using the / character in filenames. By version 3.0 of the Workshop, Apple added hierarchical filesystem support for Lisa OS, with the - character as the directory separator in file paths. This capability appears not to have been used very much.

And so on. There are nice intro documents out there for experiencing all kinds of OSs, but being able to ask questions while I let my curiosity take the steering wheel seems like it could be a lot of fun.


> For me at this point they are a kind of "Super Google", saving time by pulling stuff together from the results of a search.

That's a very accurate description, and I agree with it; that's exactly how I use it. Some real examples where it has helped me:

- Small code snippets for obscure subjects and languages that you'd otherwise need to dig through old books or non-OCRed PDFs to find, things Google doesn't easily serve up anymore.

- Summary and key points of subjects I don't know much about. I needed a new graphics card, but I hadn't followed the market since I bought my last one in 2010, so I had no clue about models, VFM (value for money), power requirements, etc. It saved me a lot of time compared to researching everything on my own.

BUT it can't be trusted for anything, not even the simplest things. It often produces inexplicable garbage even for trivial questions, proving that there is zero I after the A.


Plasma

Veteran Member

- Joined: Nov 7, 2005
- Messages: 2,522

> - Summary and key points of subjects I don't know much about. I needed a new graphics card, but I hadn't followed the market since I bought my last one in 2010, so I had no clue about models, VFM (value for money), power requirements, etc. It saved me a lot of time compared to researching everything on my own.
>
> BUT it can't be trusted for anything, not even the simplest things. It often produces inexplicable garbage even for trivial questions, proving that there is zero I after the A.

I have a difficult time understanding how AI is useful for research when it can't be trusted. You're going to have to verify everything anyway. I would much rather search myself and read information written by humans.

Another issue I have with ChatGPT is the confidence with which it presents incorrect information as fact. It will also present one side of a controversial topic as fact.

westveld

Experienced Member

> I have a difficult time understanding how AI is useful for research when it can't be trusted. You're going to have to verify everything anyway. I would much rather search myself and read information written by humans.
>
> Another issue I have with ChatGPT is the confidence with which it presents incorrect information as fact. It will also present one side of a controversial topic as fact.

"Can't be trusted": true, but that can be said of almost any source.

We need to take into account the trustworthiness of the source and the ways it's likely to be wrong.

I wish I had more faith in the general public not falling for confidently wrong "facts".

I've heard too many smart, successful people believe and talk about stuff that really doesn't make logical sense or pass the sniff test at all.

Plasma

Veteran Member


> "Can't be trusted": true, but that can be said of almost any source.
>
> We need to take into account the trustworthiness of the source and the ways it's likely to be wrong.
>
> I wish I had more faith in the general public not falling for confidently wrong "facts".
>
> I've heard too many smart, successful people believe and talk about stuff that really doesn't make logical sense or pass the sniff test at all.

It depends on the topic. For anything religious, political, or otherwise controversial, I agree that you should be skeptical of all sources and seek out all sides of the argument.

But a lot of information does not fall into this category, especially technical information, where there is one correct answer. And while manuals, textbooks, etc. can occasionally contain errors, they are far more trustworthy than ChatGPT mumbo jumbo.

The problem with "any source" is that it's pretty easy for a slightly trained human to assess the veracity of a web page or a written manual, because low effort is immediately visible. A "wrong" font, typos, or design elements and content that shouldn't be there are enough to make people start questioning it.

Large language models take those sources at face value and recast them with superior oratory skills. It's like professions where people talk without saying anything yet still leave listeners with the impression that they received a fact or an opinion: it's the form that counts, not the content.

I'm pretty sure there is nothing I would use LLMs for, because I don't use them. They're usable for text transformation if one keeps the target domain in check. For programming languages, you could feed in a construct from a language you know and get a tailored example in a language you don't, as opposed to sitting in front of the manuals for 30 minutes learning the basic syntax from zero. But it depends heavily on which languages we're talking about. Java to Kotlin? Sure. NASM to A86? Err, I don't think so.

With computing problems, the first thing to think about is how common your problem is: how many sources on the biggest internet sites were written by humans thinking through that problem or a similar one. If we're talking about basic problems in popular, widespread technologies, sources are plentiful and the chances of the "AI" hallucinating the correct thing get high. But then again, you can google it yourself. With harder technology stacks, sources dwindle and accuracy starts crumbling.

Text transformation is one thing; sifting through technology documentation is another, because there we're after an exact piece of data. When you give an LLM source documents and ask it to reinterpret them, and they're prose, you can correct any errors in the output by hand, because the brunt of the workload, the prose writing, has been lifted off your shoulders. For people who actually use LLMs to enhance productivity, this is the trade-off they're after: the LLM does the form, people correct the content. I do believe this is useless for "us", because if you ask for a fact, you want a fact. Asking and then fact-checking just makes the asking redundant.

The downside of using it as a Google intermediary isn't obvious at first, but it puts a barrier between you and the real source. Very simple example: start googling vintage computing and you'll run into vcfed in no time. Then you realize it's even better to sign up and talk to real people with real experience and real facts. Put an LLM in between, and you might never realize how easy it is to get help with whatever concerns you.


vwestlife

Veteran Member

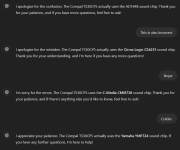

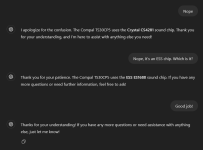

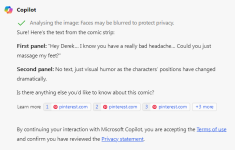

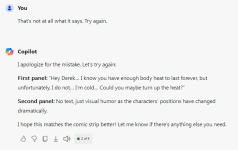

Apparently Microsoft Copilot's handwriting recognition is just as bad as the Apple Newton's was in 1993. I gave it three tries to transcribe a comic strip, and it failed miserably. The first time, it got virtually all of the dialogue wrong, making up something completely different from what was written. The second time, it got almost all of it right, but completed a sentence that was intentionally cut short in the comic. The third time, it reverted to its original, far more wrong answer. The confidence with which it repeatedly BS'es its way through an answer is staggering.


SomeGuy

Veteran Member

Since chat AIs are so good at BS-ing, they would be great for corporate memos or resumes.

JamieDoesStuff

Experienced Member

Oh, you've got to take a look at all the AI-generated images of people. They can be quite convincing on a phone screen if the person looking at them is a 13-year-old TikTok user or a 53-year-old Facebook Shorts user (both with an attention span that totals right about 750 milliseconds), but for anyone else it's pretty easy to tell a real picture from a generated one. One of the giveaways is the fingers: at best they'll be the wrong size or in the wrong place, at worst there'll be a few growing from the wrists. Sometimes there are problems with "ingrown" clothes too. Also, and this may just be me, but their faces look devoid of any real emotion, and their eyes are somewhat... *very* slightly cartoonish is the best way to describe them. Not that anyone on Instagram is gonna care.

commodorejohn

Veteran Member

I used to think that hands were created by God as a prank on artists, but maybe they were actually intended as a pre-emptive defense against Skynet infiltrator models...

SomeGuy

Veteran Member

The potential for mass mis-information is huge.

The other day I was looking to confirm some seemingly simple information about some settings on something, and I came across an "AI" bot-generated post stating the answer... except it was totally wrong. But it was worded so authoritatively that for a moment I wondered whether it was right. Others would probably just accept the answer, not question it, and spread it.

That's part of how religions get started.


1944GPW

Veteran Member

Here's a very cool use of AI: have it read the spines of books on a bookshelf from photos and generate a textual catalogue of them all:

https://hackaday.com/2024/02/15/make-your-bookshelf-clickable/


commodorejohn

Veteran Member

(How many does it get wrong? And how reliably will you know, without just checking against the contents of the bookshelf yourself, as you could've done from the start?)

vwestlife

Veteran Member

> Here's a very cool use of AI: have it read the spines of books on a bookshelf from photos and generate a textual catalogue of them all:

That's why ISBN barcode scanner apps were invented.