Eudimorphodon

Been mulling over some ideas to resurrect a stalled "homebrew computer" project lately, and among the ideas I'm considering is changing horses in midstream and making a sorta-TRS-80 compatible instead of a sorta-PET compatible. (Mainly because the "market", so to speak, for Commodore respins seems kind of saturated right now.) This obviously means switching from the 6502 CPU to the Z80. As it was, my project had gotten far enough to at least demo the memory-mapped video generation system and the CPU sharing the same memory (ROM and RAM). This is easy on the 6502 because the CPU reliably only ever hits the bus during one phase of the clock, leaving you free to do whatever you want with the opposite phase. However...

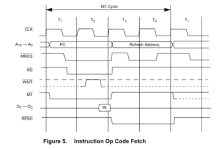

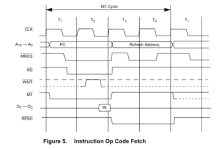

Unfortunately, the Z80 isn't polite like this. For some bizarre reason, according to the docs I've found, the Z80 qualifies memory reads on the rising edge of a clock cycle (the start of T3) when it's doing instruction fetches:

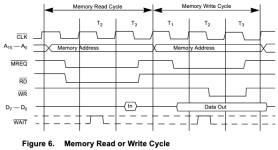

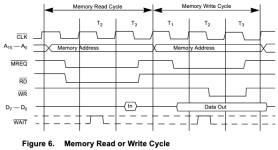

But on non-instruction reads and writes, it qualifies on the falling edge of T3 instead?

So clearly a simple rule like "video can have the RAM on high phases" isn't going to do the job here. If I kept video memory separate from CPU RAM, the "simple" solutions would be to either let the CPU always win (and deal with screen snow) or implement a wait-state generator (which I'm a little fuzzy on, but I can probably find some resources somewhere). But I'm curious what solutions, if any, people have seen or implemented in real-world designs to manage this?
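To make the conflict concrete, here's a minimal Python sketch (a toy model, not taken from any real design) that splits each T-state into a high and a low half-phase and marks, for each cycle type, the half-phase that ends at the data-sampling edge: the rising edge of T3 for M1 opcode fetches and the falling edge of T3 for ordinary memory reads, as in the datasheet timing diagrams. Treating that half-phase as the one the CPU can't give up is an assumption of the model; writes and wait states are left out.

```python
# Crude model of which Z80 clock half-phase the CPU can't surrender.
# Each T-state starts on a rising clock edge: high half ("H"), then low half ("L").
# Assumption: RAM must be presenting the CPU's data during the half-phase that
# ends at the sampling edge, so video can't steal that half-phase.

CRITICAL_HALF_PHASE = {
    "M1": "T2L",    # opcode fetch: data sampled on the rising edge of T3
    "READ": "T3H",  # ordinary memory read: data sampled on the falling edge of T3
}


def conflicts(video_half: str) -> list:
    """Cycle types whose critical half-phase a fixed video policy would steal.

    video_half is "H" (video owns every high half-phase) or "L" (every low one).
    """
    if video_half not in ("H", "L"):
        raise ValueError("video_half must be 'H' or 'L'")
    return [cycle for cycle, half in CRITICAL_HALF_PHASE.items()
            if half.endswith(video_half)]


if __name__ == "__main__":
    for policy in ("H", "L"):
        print(f"video on {policy} half-phases collides with: {conflicts(policy)}")
```

Either fixed rule steals the critical window from one of the two cycle types: video on high phases breaks ordinary reads, video on low phases breaks opcode fetches.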

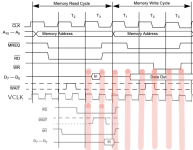

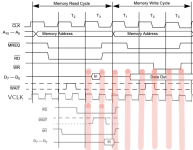

FWIW, here's the "best" idea I can come up with, and I suspect it's not that great. Long and short of it: the Z80 clock is a fraction of the dot clock, never higher than half. For every phase of the Z80's clock cycle the video memory gets a full dot-clock tick (or more), and the multiplexer gives the bus to the video memory for the earliest fraction of the Z80's tick. This results in regular, periodic access windows for video memory that the Z80 won't even know (or care) are stolen from it, as long as the memory devices are fast enough to respond to address bus changes in time. Here's a genuinely terrible GIMP'ed timing chart I threw together to demonstrate the concept; the video access windows are in pink:

(The fragment of chart under the VCLK line is T1/T2 from the instruction fetch chart.)
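A minimal sketch of the slot scheme described above, assuming the Z80 clock is the pixel clock divided by `divisor` and that the first `video_slots` pixel half-periods of every Z80 half-phase go to video (both parameter names are hypothetical). With `divisor=2, video_slots=1` it reproduces the pink windows: video and CPU alternate every half pixel period.

```python
def bus_schedule(divisor: int, video_slots: int) -> list:
    """Bus owner for each pixel-clock half-period within one Z80 T-state.

    divisor: pixel clock / Z80 clock (>= 2).
    video_slots: pixel half-periods given to video at the start of each
        Z80 half-phase; the rest of that half-phase belongs to the CPU.
    Returns a list of (z80_half_phase, owner) pairs in time order.
    """
    if divisor < 2:
        raise ValueError("divisor must be >= 2")
    # One Z80 period = divisor pixel periods = 2 * divisor pixel half-periods,
    # so each Z80 half-phase spans `divisor` pixel half-periods.
    per_half = divisor
    if not 0 < video_slots < per_half:
        raise ValueError("video_slots must leave the CPU at least one slot")
    schedule = []
    for z80_half in ("H", "L"):
        for slot in range(per_half):
            owner = "VIDEO" if slot < video_slots else "CPU"
            schedule.append((z80_half, owner))
    return schedule


if __name__ == "__main__":
    # Z80 at pixel/2, video takes the first half pixel period of each Z80 half-phase.
    print(bus_schedule(2, 1))
    # Z80 at pixel/4, video takes one full pixel period of each Z80 half-phase.
    print(bus_schedule(4, 2))
```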

The big issue I see with this is that the RAM and the multiplexing circuitry need to be lightning fast, since in this example I'm asking them to complete a full access cycle in only half a pixel clock period. That's going to be, what, 62.5 ns for an 8 MHz pixel clock? That makes the 55 ns SRAM I'm using just barely fast enough, and realistically I need a pixel clock more like 12 MHz, which leaves only about 41.7 ns. So... now this reads like an idea that *might* work if I used something completely supercharged like 20 ns cache memory for VRAM, but it's not practical to use that for all the RAM in the system. Feh. Just talked myself out of that.
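The timing arithmetic above as a quick Python check. `mux_ns` is a hypothetical allowance for multiplexer/buffer propagation delay (left at 0 by default); real figures would come from the datasheets of whatever parts are actually used.

```python
def half_period_ns(pixel_clock_hz: float) -> float:
    """Length of half a pixel clock period, in nanoseconds."""
    return 1e9 / pixel_clock_hz / 2


def fits(pixel_clock_hz: float, sram_ns: float, mux_ns: float = 0.0) -> bool:
    """True if an SRAM access (plus an assumed mux delay) fits in half a pixel period."""
    return sram_ns + mux_ns <= half_period_ns(pixel_clock_hz)


if __name__ == "__main__":
    for clock_mhz in (8, 12):
        window = half_period_ns(clock_mhz * 1e6)
        for sram_ns in (55, 20):
            verdict = "fits" if fits(clock_mhz * 1e6, sram_ns) else "too slow"
            print(f"{clock_mhz} MHz: {window:.1f} ns window, {sram_ns} ns SRAM {verdict}")
```

At 8 MHz the 55 ns part leaves only 7.5 ns of slack for the multiplexer, which is why it's "just barely" fast enough; at 12 MHz it doesn't fit at all, while 20 ns parts do.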

A variation of this idea is to just choke down the fact that the Z80 effectively can't run faster than 1/4 of the pixel clock. The result is essentially the same as above, but access is divided on full clock ticks instead of half ticks. (So imagine VCLK in that bad diagram running twice as fast, with two up/down transitions per T-state phase instead of one.) Are there any deal breakers with this? If I were trying to emulate, say, a TRS-80 Model I, I could use a 14 MHz pixel clock (with 8-pixel-wide characters instead of 6); a divide-by-8 would give me 1.75 MHz, which is pretty close to the original 1.77 MHz, and a divide-by-4 would give 3.5 MHz, a decent speed bump. (A 14.7456 MHz crystal is also a widely available option; that would give a CPU clock of 1.8432 MHz, also close to the real thing, and would also offer a convenient clock for a UART...)
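A small Python sketch of the clock arithmetic above. Two assumptions are not from the post: that the UART is a 16x-oversampling 8250/16550-style part, and that in the /4 variant video gets exactly one full pixel period per Z80 half-phase.

```python
ORIGINAL_MODEL_I_HZ = 1.77e6  # Model I CPU clock as quoted above (approximate)


def cpu_clock_hz(crystal_hz: float, divisor: int) -> float:
    """Z80 clock derived by dividing the pixel clock crystal."""
    return crystal_hz / divisor


def video_window_ns(pixel_clock_hz: float) -> float:
    """Assumed /4 variant: video gets one full pixel period per Z80 half-phase."""
    return 1e9 / pixel_clock_hz


def uart_divisor(uart_clock_hz: float, baud: int, oversample: int = 16) -> float:
    """Baud-rate divisor for a UART that oversamples 16x (8250/16550 style)."""
    return uart_clock_hz / (oversample * baud)


if __name__ == "__main__":
    for crystal_hz in (14e6, 14.7456e6):
        for divisor in (8, 4):
            cpu = cpu_clock_hz(crystal_hz, divisor)
            print(f"{crystal_hz / 1e6:g} MHz / {divisor} = {cpu / 1e6:.4f} MHz "
                  f"({cpu / ORIGINAL_MODEL_I_HZ:.2f}x the original)")
        print(f"  /4 video window: {video_window_ns(crystal_hz):.1f} ns")
    # 1.8432 MHz divides evenly into standard baud rates.
    print(uart_divisor(cpu_clock_hz(14.7456e6, 8), 9600))
```

Both crystals give video roughly 68 to 71 ns per slot in the /4 variant, comfortably above the 55 ns SRAM, and 1.8432 MHz gives an exact divisor of 12 for 9600 baud.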

... Digging around, it looks like the ULA in the Sinclair ZX Spectrum does something kind of like this (????). Except in the Sinclair's case it only holds the clock cycle long when video accesses are actually happening and the CPU is trying to touch the screen RAM; otherwise everything proceeds at full speed. I'd rather avoid having the CPU run faster in the blanking area (except possibly as a selectable option) for compatibility reasons; the TRS-80 is chock full of software timing loops.
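Why that matters for timing loops can be shown with a deliberately crude Python model (not the actual ULA contention pattern): with contention on, every T-state costs `slow` pixel ticks during active display and `fast` ticks in blanking; with it off, every T-state costs `slow` ticks everywhere. The line geometry numbers in the example are hypothetical.

```python
def loop_ticks(t_states: int, start_tick: int, line_len: int, active_len: int,
               fast: int, slow: int, contended: bool) -> int:
    """Pixel ticks a run of `t_states` T-states takes, starting at `start_tick`.

    Crude model: the first `active_len` ticks of each `line_len`-tick line
    are active display. With contention on, T-states cost `slow` ticks there
    and `fast` ticks in blanking; with it off, every T-state costs `slow`.
    """
    tick = start_tick
    for _ in range(t_states):
        in_active = tick % line_len < active_len
        tick += slow if (in_active or not contended) else fast
    return tick - start_tick


if __name__ == "__main__":
    # Hypothetical geometry: 896-tick lines, 512 active ticks, CPU at pixel/4 or pixel/2.
    for contended in (False, True):
        durations = [loop_ticks(100, start, 896, 512, 2, 4, contended)
                     for start in (0, 600)]
        print(f"contended={contended}: {durations}")
```

With contention off, the same 100-T-state delay loop takes 400 ticks no matter where it starts; with it on, it takes 400 ticks when started in the active area and 200 in blanking, so its real duration depends on where the beam happens to be.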

I guess I have another vague and probably bad idea: deferring a video access if the M1 line is asserted when it's supposed to happen. I.e., interleave the clock so video has the RAM on the low phases of the CPU clock, which should be compatible with normal reads/writes if I'm reading the charts right; but if M1 and RD are both asserted when the video read wants to happen, it gets deferred until the next low phase. If the pixel data feeding the shift register were "cached" in a latch, it'd probably be possible to get away with this and get the max CPU speed back up to pixel clock/2? Maybe?
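A rough Python model of the deferral idea, under assumptions that aren't from any datasheet or real design: video wants one RAM fetch on the low half-phase of every `fetch_every`-th T-state; ordinary reads and writes tolerate losing their low half-phases (as the post suggests); the only low half-phase the CPU must keep is T2 of each M1 cycle, since opcode data is sampled on the rising edge of T3; and a latch ahead of the shift register absorbs a late fetch. A run of back-to-back 4-T-state M1 cycles (e.g. NOPs) stands in for the densest stream of opcode fetches.

```python
from collections import deque


def simulate(n_tstates: int, fetch_every: int, fetch_offset: int,
             m1_starts: list) -> tuple:
    """Return (worst fetch delay in T-states, fetches still pending at the end).

    Each T-state has one low half-phase, which video may use unless it is
    T2 of an M1 cycle (an M1 starting at T-state s has its T2 at s + 1).
    Pending fetches are served oldest first, one per usable low half-phase.
    """
    blocked = {s + 1 for s in m1_starts}
    pending = deque()
    worst = 0
    for t in range(n_tstates):
        if t % fetch_every == fetch_offset:
            pending.append(t)  # video requests a fetch on this low half-phase
        if pending and t not in blocked:
            requested = pending.popleft()
            worst = max(worst, t - requested)
    return worst, len(pending)


if __name__ == "__main__":
    n = 400
    nops = list(range(0, n, 4))  # back-to-back 4-T-state opcode fetches
    # Z80 at pixel/2 with 8-pixel characters: one character fetch every 4 T-states.
    for offset in range(4):
        print(offset, simulate(n, 4, offset, nops))
```

In this scenario a fetch is never more than one T-state late and nothing piles up, so a single latch stage looks like enough; setup/hold times, wait states, and the refresh cycle's MREQ during T3/T4 are not modeled.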

Or maybe I should just use separate VRAM and system RAM. Just curious if this was actually doable.